Introduction

Tivoli Business Service Manager (TBSM) can calculate amazing things for you, if you only need them. This is thanks to its powerful rules engine, the key part of TBSM, as well as the Netcool/Impact policy engine running just under the hood of every TBSM edition. You can later present your calculation results on a dashboard or in reports, depending on whether you have a real-time scorecard or historical KPI reports in mind.

In this article, I’ll show how you can make TBSM process its inputs by triggering various template rules in various ways. This isn’t really well documented, or at least it isn’t documented in a single place.

Status, numerical and text rule triggers

In this chapter I’ll show three kinds of rules (status, numerical and text) and how TBSM triggers them, that is, how it processes the input data, runs the rules and returns the outputs.

In general, all three techniques kick off TBSM rules based on the same two conditions: time and a new value. Here they are:

- OMNIbus Event Reader service (for incoming status rules, numerical rules or text rules that use the OMNIbus ObjectServer as the data feed)

- TBSM data fetchers (for numerical or text rules with a fetcher-based data feed)

- TBSM/Impact services of type Policy activator (using an Impact policy with PassToTBSM function calls to send data to numerical or text rules)

Figure 1. Three techniques of triggering status, numerical and text rules in TBSM

Make note. There are also ITM policy fetchers, as well as the highly undocumented any-policy fetchers, configurable in TBSM. I won’t comment on them in this material; however, their basis is the same as for any fetcher: time.

Let’s take a look at the first and most popular type of rule trigger: the OMNIbus Event Reader service in Impact, widely used in TBSM to process events stored in the ObjectServer against the service trees.

OMNIbus Event Reader

The OMNIbus Event Reader is an Impact service that runs regularly, by default every 3 seconds. It connects to Netcool/OMNIbus to get the events stored in ObjectServer memory that might affect TBSM service tree elements. It selects the events based on the following default filter:

(Class <> 12000) AND (Type <> 2) AND ((Severity <> RAD_RawInputLastValue) or (RAD_FunctionType = '')) AND (RAD_SeenByTBSM = 0) AND (BSM_Identity <> '')

The (Severity <> RAD_RawInputLastValue) condition ensures that an event is checked for a new value in the Severity field compared to the previous event.
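Here is a minimal Python sketch that evaluates the default filter's conditions against one event, to make them easier to read. It assumes an event can be represented as a dict keyed by the ObjectServer field names used in the filter. The helper name matches_default_filter and the example values are hypothetical. This only illustrates the filter logic; it is not how the Event Reader is implemented.

```python
def matches_default_filter(event: dict) -> bool:
    """Return True if an event would pass the default Event Reader filter.

    Hypothetical helper that mirrors:
      (Class <> 12000) AND (Type <> 2)
      AND ((Severity <> RAD_RawInputLastValue) OR (RAD_FunctionType = ''))
      AND (RAD_SeenByTBSM = 0) AND (BSM_Identity <> '')
    """
    return (
        event["Class"] != 12000
        and event["Type"] != 2
        # Either the severity differs from the last raw input value,
        # or RAD_FunctionType is empty
        and (
            event["Severity"] != event["RAD_RawInputLastValue"]
            or event["RAD_FunctionType"] == ""
        )
        and event["RAD_SeenByTBSM"] == 0
        and event["BSM_Identity"] != ""
    )


# Example event; "placeholder" is a hypothetical non-empty RAD_FunctionType
event = {
    "Class": 0, "Type": 1, "Severity": 4, "RAD_RawInputLastValue": 3,
    "RAD_FunctionType": "placeholder", "RAD_SeenByTBSM": 0,
    "BSM_Identity": "TestInstance",
}
print(matches_default_filter(event))                                  # True: new severity
print(matches_default_filter({**event, "RAD_RawInputLastValue": 4}))  # False: same severity
```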

The Event Reader itself can be found in the Impact UI server, on the Services page in TIP, among the other services included in the Impact project called TBSM_BASE:

Figure 2. Configuration of the TBSMOMNIbusEventReader service

Make note. TBSM allows you to configure other event readers, but you can use only one at a time.

All incoming status rules use this Event Reader by default. There’s a built-in mapping between the name of the Event Reader and the Data source field in Status/Text/Numerical rules. That is why just the “ObjectServer” caption appears in the new rule form:

Figure 3. Screenshot of the New Incoming status rule form

Policy activators

Impact services of type Policy activator simply run a policy every defined period of time.

Figure 4. Screenshot of a policy activator service configuration for TBSMTreeRuleHeartbeat

The policy needs to be created beforehand. In order to trigger TBSM rules, it must call the PassToTBSM() function with an event object as its argument. Let’s say this is my TBSMTreeRulesHeartbeat policy:

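// Current time, formatted as HH:mm:ss
Seconds = GetDate();
Time = LocalTime(Seconds, "HH:mm:ss");
// Random number, so that every activation carries a new value
randNum = Random(100000);
// New event; this name shows up as PollerName in the policy log
ev=NewEvent("TBSMTreeRuleHeartbeatService");
ev.timestamp = String(Time);
// Service identifier that the rules will match on
ev.bsm_identity = "AnyChild";
ev.randNum = randNum;
// Send the event to the TBSM rules, then log it
PassToTBSM(ev);
log(ev);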

In my example, a new value is generated every time the policy is activated, thanks to the GetDate() function (and the Random() call, which fills randNum). Pay attention to the field called ev.bsm_identity; I’ll refer to this field later on. For simplicity, this field always has the value “AnyChild”.
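The following Python sketch models the fields the policy sets on each activation, so you can reason about the output without an Impact server. Each event gets an HH:mm:ss timestamp, the constant bsm_identity and a random number. The sketch does not call Impact or PassToTBSM. build_heartbeat_event is a hypothetical name, and the 0 to 99999 range is an assumption about Random(100000).

```python
import random
from datetime import datetime


def build_heartbeat_event(now: datetime, rng: random.Random) -> dict:
    """Model the fields the heartbeat policy sets on each activation (hypothetical)."""
    return {
        "timestamp": now.strftime("%H:%M:%S"),  # like LocalTime(Seconds, "HH:mm:ss")
        "bsm_identity": "AnyChild",             # constant service identifier
        "randNum": rng.randrange(100000),       # assumed range 0..99999
    }


# Two activations five minutes apart, as in the service log
rng = random.Random(42)
first = build_heartbeat_event(datetime(2016, 5, 12, 23, 41, 29), rng)
second = build_heartbeat_event(datetime(2016, 5, 12, 23, 46, 29), rng)
print(first["timestamp"], second["timestamp"])  # 23:41:29 23:46:29
```

The timestamp changes on every activation, so the rule reading it always sees a new value. randNum is new with very high probability but, being random, could in principle repeat.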

Make note. Unlike the TBSM OMNIbus Event Reader, the policy activating services, as well as the policies themselves, don’t have to be included in the TBSM_BASE Impact project.

Netcool/Impact policies give you the freedom to reach any data source, via SQL, SOAP, XML, REST, the command line or any other way you like. You can then process any data you find useful in TBSM. The only requirement is passing that data to TBSM via the PassToTBSM function.

TBSM data fetchers

TBSM data fetchers combine an Impact policy with a DirectSQL function call and an Impact policy activator service. Additionally, data fetchers have a mini concept of a schedule: you can set their run time to a specific hour and minute and run them once a day (e.g. 12:00 am daily). They also allow postponing or advancing the next run time in case of long-running SQL queries.
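The two scheduling modes can be illustrated with a small Python sketch. One function computes the next run from a fixed interval (5 minutes being the fetcher default). The other computes the next occurrence of a daily hour and minute. This models the described behavior under simple assumptions (naive local datetimes, no postponing or advancing); it is not TBSM’s scheduler, and both function names are hypothetical.

```python
from datetime import datetime, timedelta


def next_interval_run(last_run: datetime,
                      interval: timedelta = timedelta(minutes=5)) -> datetime:
    """Interval mode: the next run is a fixed period after the previous one."""
    return last_run + interval


def next_daily_run(now: datetime, hour: int, minute: int) -> datetime:
    """Daily mode: the next occurrence of hour:minute strictly after now."""
    candidate = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= now:
        candidate += timedelta(days=1)  # today's slot has passed, use tomorrow
    return candidate


print(next_interval_run(datetime(2016, 5, 12, 23, 41, 29)))     # 2016-05-12 23:46:29
print(next_daily_run(datetime(2016, 5, 12, 23, 41, 29), 0, 0))  # 2016-05-13 00:00:00
```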

Make note. Data fetchers can be SQL fetchers, ITM policy fetchers or any-policy fetchers. Unfortunately, the TBSM GUI was never fully adjusted to reconfigure ITM policy fetchers and was never enabled to configure any-policy fetchers. For the last two you have to perform a few manual, command-line level steps instead. Fortunately, the PassToTBSM function available in Impact 6.x policies can be used instead, so any-policy fetchers aren’t that useful anymore.

By default, every fetcher runs every 5 minutes.

Figure 5. Screenshot of the HeartbeatFetcher data fetcher

In the example presented in the screenshot above, the data fetcher connects to a DB2 database and runs a DB2 SQL query. A new value is ensured every time by calling the native DB2 random function. The whole query is the following:

select 100000*rand() as "randNum", 'AnyChild' as "bsm_identity" from sysibm.sysdummy1

Pay attention to the bsm_identity field. It always returns the same value, “AnyChild”, just like the policy explained before.

Triggering status, numerical, text and formula rules

In the previous chapter I presented various rule triggering methods. It’s now time to show how those triggers work in practice. I’ll create a template with 5 numerical and text rules, plus 3 supporting formulas. I don’t want to change any instance status, so I won’t create any incoming status rule this time. I’ll present the output values of all those rules on a scorecard in Tivoli Integrated Portal. Below you can see my intended template with rules:

Figure 6. Screenshot of the t_triggerrulestest template with rules

OMNIbus Event Reader-based rules

Let’s start with 2 rules using the TBSM OMNIbus Event Reader service. As I said, I won’t use a status rule. Instead, I’ll use one numerical and one text rule to return the last event’s severity and the last event’s summary. Before I do that, let me configure my SimNet probe, which will send random events to my ObjectServer. My future service instance implementing the t_triggerrulestest template will be called TestInstance (or at least it will have such a value in its event identifiers). I want one event of each type, each with a different probability of being sent:

#######################################################################
#
# Format:
#
#       Node Type Probability
#
# where Node        => Node name for alarm
#       Type        => 0 - Link Up/Down
#                      1 - Machine Up/Down
#                      2 - Disk space
#                      3 - Port Error
#       Probability => Percentage (0 - 100%)
#
#######################################################################
TestInstance 0 10
TestInstance 1 15
TestInstance 2 20
TestInstance 3 25

Let’s see if it works:

Figure 7. Screenshot of the Event Viewer presenting test events sent by the SimNet probe

Now for my two rules. I marked the important settings in green: my Data feed is ObjectServer, the source is the SimNet probe, the field containing the service identifiers is Node, and the output value taken back is Severity:

Figure 8. Screenshot of the numr_event_lastseverity rule form

The same applies here, but this time the rule is a Text rule with a fancy output expression:

'AlertGroup: '+AlertGroup+', AlertKey: '+AlertKey+', Summary: '+Summary

The rule itself:

Figure 9. Screenshot of the txtr_event_lastsummary rule

Fetcher-based rule

That was easy. Now for something a little more complicated: the data fetcher. I already created my data fetcher and showed it earlier in this material. Let’s check whether it works fine, i.e. whether it fetches data every 5 minutes. The logs show that it does:

1463089289272[HeartbeatFetcher]Fetching from TBSMComponentRegistry has started on Thu May 12 23:41:29 CEST 2016
1463089289287[HeartbeatFetcher]Fetched successfully on Thu May 12 23:41:29 CEST 2016 with 1 row(s)
1463089289287[HeartbeatFetcher]Fetching duration: 00:00:00s
1463089289412[HeartbeatFetcher]1 row(s) processed successfully on Thu May 12 23:41:29 CEST 2016. Duration: 00:00:00s. The entire process took 00:00:00s
1463089589412[HeartbeatFetcher]Fetching from TBSMComponentRegistry has started on Thu May 12 23:46:29 CEST 2016
1463089589427[HeartbeatFetcher]Fetched successfully on Thu May 12 23:46:29 CEST 2016 with 1 row(s)
1463089589427[HeartbeatFetcher]Fetching duration: 00:00:00s
1463089589558[HeartbeatFetcher]1 row(s) processed successfully on Thu May 12 23:46:29 CEST 2016. Duration: 00:00:00s. The entire process took 00:00:00s

And the data preview looks good too:

Figure 10. The Heartbeat fetcher output data preview

My next rule will be a single numerical rule returning the randNum value. Again I marked the important settings in green: I select HeartbeatFetcher as the Data Feed, bsm_identity as the service event identifier and randNum as the output value:

Figure 11. Screenshot of the numr_fetcher_randNum rule

Policy activated rules

Last but not least, I will create two rules getting data from my policy, which is activated by my custom Impact service. I showed the policy and the service in the previous chapter, so let’s just make sure they both work OK. This is how the service works: every 5 minutes my policy gets activated, and every time it returns another value in the randNum field:

12 maj 2016 23:41:29,652: [TBSMTreeRulesHeartbeat][pool-7-thread-87]Parser log: (PollerName=TBSMTreeRuleHeartbeatService, randNum=71648, timestamp=23:41:29, bsm_identity=AnyChild)
12 maj 2016 23:46:29,673: [TBSMTreeRulesHeartbeat][pool-7-thread-87]Parser log: (PollerName=TBSMTreeRuleHeartbeatService, randNum=8997, timestamp=23:46:29, bsm_identity=AnyChild)
12 maj 2016 23:51:29,674: [TBSMTreeRulesHeartbeat][pool-7-thread-91]Parser log: (PollerName=TBSMTreeRuleHeartbeatService, randNum=73560, timestamp=23:51:29, bsm_identity=AnyChild)
12 maj 2016 23:56:29,700: [TBSMTreeRulesHeartbeat][pool-7-thread-91]Parser log: (PollerName=TBSMTreeRuleHeartbeatService, randNum=60770, timestamp=23:56:29, bsm_identity=AnyChild)
13 maj 2016 00:01:29,724: [TBSMTreeRulesHeartbeat][pool-7-thread-92]Parser log: (PollerName=TBSMTreeRuleHeartbeatService, randNum=55928, timestamp=00:01:29, bsm_identity=AnyChild)

Let’s now create the rules. I will have two rules again, one numerical and one text. The numerical rule will have TBSMTreeRuleHeartbeatService as the Data Feed, the bsm_identity field as the service event identifier field, and the randNum field as my output:

Figure 12. Screenshot of the numr_heartbeat_randNum rule

Make note. Every time you add another field to the policy activated by your service, make sure that the new field is mapped to the right data type in the Customize Fields form. You will need to add that field first:

Figure 13. Screenshot of the Customize Fields form

The second rule looks as follows. This time it is a text rule, and it returns the timestamp value:

Figure 14. Screenshot of the txtr_heartbeat_lasttime rule

Formula rules

The last rules I’ll create will be three formula, policy-based, text rules. Each of them will look at different rules created previously and “spy” on their activity. Let’s see the first example:


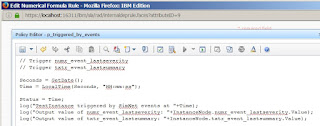

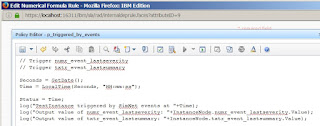

Figure 15. Screenshot of the nfr_triggered_by_events rule

This rule will use a policy and will be a text rule. It is important to tick both of those fields before continuing, because later the Text Rule field greys out and becomes inactive. After ticking both fields, I click the Edit policy button. All three rules look the same at this level, so I won’t include all 3 screenshots; only their names differ. I’ll create a separate IPL policy for each of them. Here’s the mapping:

| Rule name | Policy name |
|---|---|
| nfr_triggered_by_events | p_triggered_by_events |
| nfr_triggered_by_fetcher | p_triggered_by_fetcher |
| nfr_triggered_by_service | p_triggered_by_service |

Each of those three policies will look similar; each one just watches different rules created so far. The p_triggered_by_events policy will do this:

// Trigger numr_event_lastseverity
// Trigger txtr_event_lastsummary
Seconds = GetDate();
Time = LocalTime(Seconds, "HH:mm:ss");
Status = Time;
log("TestInstance triggered by SimNet events at "+Time);
log("Output value of numr_event_lastseverity: "+InstanceNode.numr_event_lastseverity.Value);
log("Output value of txtr_event_lastsummary: "+InstanceNode.txtr_event_lastsummary.Value);

Policy p_triggered_by_fetcher will do this:

// Trigger numr_fetcher_randnum
Seconds = GetDate();
Time = LocalTime(Seconds, "HH:mm:ss");
Status = Time;
log("TestInstance triggered by HeartbeatFetcher at "+Time);
log("Output value of numr_fetcher_randnum: "+InstanceNode.numr_fetcher_randnum.Value);

And policy p_triggered_by_service will do this:

// Trigger numr_heartbeat_randnum
// Trigger txtr_heartbeat_lasttime
Seconds = GetDate();
Time = LocalTime(Seconds, "HH:mm:ss");
Status = Time;
log("TestInstance triggered by TBSMHeartbeatService at "+Time);
log("Output value of numr_heartbeat_randnum: "+InstanceNode.numr_heartbeat_randnum.Value);
log("Output value of txtr_heartbeat_lasttime: "+InstanceNode.txtr_heartbeat_lasttime.Value);

You may notice that each policy starts with a comment section. This is important: this is how formula rules get triggered. It is enough to mention another rule by its name in a comment to trigger your formula every time that referenced rule returns a new output value. This is why the randnum-related rules are referenced in the formulas: those rules are designed to return a different value every time they run. Only the first formula is different. I assume it will be triggered every time the combination of the Summary, AlertGroup and AlertKey field values in the source event changes.
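The comment-based triggering can be modeled in a few lines of Python. The sketch scans the // comment lines of each formula policy for known rule names. When a rule returns a new value, it lists the formulas that reference it. This illustrates the behavior described above; it is not TBSM code. The helper names are hypothetical, and whole-word, case-insensitive matching is an assumption of this sketch (the article spells numr_fetcher_randNum both ways).

```python
from __future__ import annotations

import re


def comment_refs(policy_text: str, rule_names: set[str]) -> set[str]:
    """Rule names mentioned in // comment lines of a policy (hypothetical helper).

    Matching is whole-word and case-insensitive, an assumption for this sketch.
    """
    by_lower = {name.lower(): name for name in rule_names}
    refs: set[str] = set()
    for line in policy_text.splitlines():
        stripped = line.strip()
        if not stripped.startswith("//"):
            continue  # only comment lines count in this model
        for word in re.findall(r"\w+", stripped):
            if word.lower() in by_lower:
                refs.add(by_lower[word.lower()])
    return refs


def formulas_to_run(changed_rule: str, formulas: dict[str, str],
                    rule_names: set[str]) -> list[str]:
    """Formulas whose policy comments reference the rule that returned a new value."""
    return sorted(name for name, text in formulas.items()
                  if changed_rule in comment_refs(text, rule_names))


RULES = {"numr_event_lastseverity", "txtr_event_lastsummary", "numr_fetcher_randnum",
         "numr_heartbeat_randnum", "txtr_heartbeat_lasttime"}
FORMULAS = {
    "nfr_triggered_by_events":
        "// Trigger numr_event_lastseverity\n// Trigger txtr_event_lastsummary\nStatus = Time;",
    "nfr_triggered_by_fetcher": "// Trigger numr_fetcher_randnum\nStatus = Time;",
    "nfr_triggered_by_service":
        "// Trigger numr_heartbeat_randnum\n// Trigger txtr_heartbeat_lasttime\nStatus = Time;",
}

print(formulas_to_run("numr_fetcher_randnum", FORMULAS, RULES))  # ['nfr_triggered_by_fetcher']
# One event that changes both severity and summary triggers the events formula twice
runs = [f for rule in ("numr_event_lastseverity", "txtr_event_lastsummary")
        for f in formulas_to_run(rule, FORMULAS, RULES)]
print(runs)  # ['nfr_triggered_by_events', 'nfr_triggered_by_events']
```

In this model, an event that changes both referenced event rules runs the same formula twice. That is consistent with the double triggering of the event-based formula described later in the testing chapter.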

The trigger numerical and text rules are also mentioned later in these policies, where the policies read their output values, e.g. to write them to the log file. This is not necessary to trigger my formulas, though. I log the outputs of those trigger rules for troubleshooting purposes only.

The purpose of these 3 policies and 3 formulas is to report the time when the numerical or text rules last worked.

Below you can see one of the policies in its actual form.

Figure 16. Screenshot of the text of one of the policies

Testing the triggers

Now it’s time to test the trigger rules and the triggers, and to troubleshoot if needed.

Triggering rules in normal operations

In order to do that, we will need a service instance implementing our newly created template. I call it TestInstance, and this is its base configuration:

Figure 17. Screenshot of configuration of service instance TestInstance – templates

It is important to make sure that the right event identifiers are selected in the Identification Fields tab. I need to remember which bsm_identity values I set in all the rules: mainly AnyChild (the policy and the fetcher) and TestInstance (the SimNet probe).

Figure 18. Screenshot of configuration of service instance TestInstance – identifiers

Make note. In real life your instance will have its own individual identifiers, such as a TADDM GUID or a ServiceNow sys_id. It is important to find a match between that value and the affecting events or matching KPIs. If necessary, define new identifiers that ensure such a match.

Let’s see if it works in general. I created a scorecard and a page presenting all the values of my new instance. I’ll also put fragments of my formula-related policy logs on top, to see whether the data returned in the policies and the timestamps match:

Figure 19. Screenshot of the scorecard with policy logs on top

Let’s take a closer look at the first section. The same event arrived just once, but since the formula is triggered by two rules, it was triggered twice in a row. The last event arrived at 20:27:00, its severity was 4 (major) and the summary was about Link Down. Both rules, numr_event_lastseverity and txtr_event_lastsummary, triggered my formula correctly.

The next section is about the fetcher. The latest random number is 16589,861 (the scorecard uses a decimal comma), and the rule numr_fetcher_randnum triggered my formula correctly.

The last section covers the policy-activated rules and formula. This time I have two rules again, and they both triggered the formula correctly. The last run was at 20:26:30. The two runs show two different randnum values; this is caused by my formula policy referring to the numerical rules twice.

Triggering rules after TBSM server restart

I’ll now show a problem TBSM has with rules that aren’t triggered by any trigger. As I said in the previous chapters, TBSM needs rules to be triggered every now and then. The value also needs to change between triggers for the rule to return it again.

This causes some issues when the TBSM server restarts. Suppose a value hasn’t changed before the restart and is still the same after it. If the rule that returns it isn’t triggered, TBSM may be unable to display or return that value correctly. A server restart clears TBSM memory, so no rule output values are preserved after the restart.
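The “new value” condition and the effect of a restart can be sketched as a small in-memory cache. The cache reports a change only when a rule’s output differs from the last stored one, and a restart clears everything. OutputCache is a hypothetical class. Using 0 as the value shown when nothing is stored is an assumption based on the scorecard behavior in this chapter. The sketch models the behavior described here, not TBSM internals.

```python
from __future__ import annotations


class OutputCache:
    """Toy model of rule output values held in TBSM memory (hypothetical class)."""

    def __init__(self) -> None:
        self._last: dict[str, object] = {}

    def publish(self, rule: str, value: object) -> bool:
        """Store a rule output; return True only when it is a new value."""
        if rule in self._last and self._last[rule] == value:
            return False  # unchanged value: nothing downstream is triggered
        self._last[rule] = value
        return True       # new value: dependent rules would be triggered

    def shown(self, rule: str, default: object = 0) -> object:
        """Value displayed for a rule; 0 stands in for 'not computed yet'."""
        return self._last.get(rule, default)

    def restart(self) -> None:
        """A server restart clears memory, so no output survives it."""
        self._last.clear()


cache = OutputCache()
print(cache.publish("nfr_numchildren", 3))  # True: first value
print(cache.publish("nfr_numchildren", 3))  # False: same value again
cache.restart()
print(cache.shown("nfr_numchildren"))       # 0 until the rule is evaluated again
```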

Here’s an example. I’ll create one new formula rule with this policy in my test template:

Status = ServiceInstance.NUMCHILDREN;
log("Service instance "+ServiceInstance.SERVICEINSTANCENAME+" ("+ServiceInstance.DISPLAYNAME+") has children: "+Status);

Here’s the rule itself:

Figure 20. Screenshot of nfr_numchildren rule configuration

As the next step, I add one more column to my scorecard to show the output of the newly created rule. I also created 3 service instances and made them children of the TestInstance instance.

Figure 21. Screenshot of the scorecard showing 3 children

My formula policy log also returns the number 3:

13 maj 2016 12:17:56,664: [p_numchildren][pool-7-thread-34 [TransBlockRunner-2]]Parser log: Service instance TestInstance (TestInstance) has children: 3

Now, if I simply restart the TBSM server, the value shown will be 0 and I will see no new entry in the log:

Figure 22. Screenshot of the scorecard after server restart showing 0 children

I can change this situation by taking one of three actions:

- Adding new child instances to, or removing old ones from, TestInstance

- Modifying the formula policy

- Introducing a trigger to the formula policy

However, the first two options don’t protect me from another server restart.

Let’s say I add another child instance. This is how the scorecard will look:

Before the restart: [scorecard screenshot]

However, after the restart: [scorecard screenshot]

Or I may want to modify my rule. After I save my changes, the value will display correctly. However, another server restart will reset it back to 0 anyway.

So let’s say I change my policy to this:

Status = ServiceInstance.NUMCHILDREN;
log("Service instance "+ServiceInstance.SERVICEINSTANCENAME+" ("+ServiceInstance.DISPLAYNAME+") has children: "+Status);
log("Service instance ID: "+ServiceInstance.SERVICEINSTANCEID);

And my policy log now contains two entries per run:

13 maj 2016 12:56:07,023: [p_numchildren][pool-7-thread-4 [TransBlockRunner-1]]Parser log: Service instance TestInstance (TestInstance) has children: 4
13 maj 2016 12:56:07,023: [p_numchildren][pool-7-thread-4 [TransBlockRunner-1]]Parser log: Service instance ID: 163

But the situation before and after the restart is the same:

Before the restart: [scorecard screenshot]

After the restart: [scorecard screenshot]

It’s not a frequent situation, though. If your rules are normally triggered by events or data fetchers, you can expect frequent updates to your output values even after your TBSM server restarts. But if you want to present an output value in a rule that normally isn’t triggered, make sure you include a reference to a trigger in your rule. Let’s use two of the triggers we configured previously in my new formula policy:

// Trigger by numr_fetcher_randnum
// Trigger by numr_heartbeat_randnum
Status = ServiceInstance.NUMCHILDREN;
log("Service instance "+ServiceInstance.SERVICEINSTANCENAME+" ("+ServiceInstance.DISPLAYNAME+") has children: "+Status);
log("Service instance ID: "+ServiceInstance.SERVICEINSTANCEID);

By following the policy log, you can already notice that there are many pairs of entries. There are precisely as many pairs as the number of times the formula was triggered by one of the trigger rules.

The first pair of entries was added after saving the rule. The next 2 pairs were added as a result of the triggers working fine:

13 maj 2016 13:22:36,837: [p_numchildren][pool-7-thread-3 [TransBlockRunner-1]]Parser log: Service instance TestInstance (TestInstance) has children: 4
13 maj 2016 13:22:36,837: [p_numchildren][pool-7-thread-3 [TransBlockRunner-1]]Parser log: Service instance ID: 163
13 maj 2016 13:24:12,833: [p_numchildren][pool-7-thread-3 [TransBlockRunner-1]]Parser log: Service instance TestInstance (TestInstance) has children: 4
13 maj 2016 13:24:12,833: [p_numchildren][pool-7-thread-3 [TransBlockRunner-1]]Parser log: Service instance ID: 163
13 maj 2016 13:24:18,465: [p_numchildren][pool-7-thread-3 [TransBlockRunner-1]]Parser log: Service instance TestInstance (TestInstance) has children: 4
13 maj 2016 13:24:18,465: [p_numchildren][pool-7-thread-3 [TransBlockRunner-1]]Parser log: Service instance ID: 163

Let’s do the final test: a TBSM server restart.

Before the restart: [scorecard screenshot]

After the restart: [scorecard screenshot]
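The whole scenario can be replayed in a toy Python model. A formula is evaluated once when saved, and afterwards only when one of its trigger rules returns a new value; a restart clears all stored outputs. The assumptions are simplified: ToyEngine, the two formula names and the default of 0 are all hypothetical, and 71648 is just a heartbeat value taken from the earlier log. Under them, the formula without a trigger reference keeps showing 0 after the restart. The one referencing numr_heartbeat_randnum is recomputed on the next heartbeat.

```python
from __future__ import annotations

from typing import Callable


class ToyEngine:
    """Toy model: a formula re-runs only when one of its trigger rules gets a new value."""

    def __init__(self) -> None:
        self.outputs: dict[str, object] = {}              # in-memory rule outputs
        self.triggers: dict[str, set[str]] = {}           # formula -> trigger rules
        self.policies: dict[str, Callable[[], object]] = {}
        self.runs: dict[str, int] = {}                    # evaluations per formula

    def add_formula(self, name: str, policy: Callable[[], object],
                    triggers: set[str]) -> None:
        """Register a formula; saving the rule evaluates it once."""
        self.policies[name] = policy
        self.triggers[name] = triggers
        self._run(name)

    def _run(self, name: str) -> None:
        self.outputs[name] = self.policies[name]()
        self.runs[name] = self.runs.get(name, 0) + 1

    def publish(self, rule: str, value: object) -> None:
        """A trigger rule returns a value; dependants run only if it is new."""
        if rule in self.outputs and self.outputs[rule] == value:
            return
        self.outputs[rule] = value
        for name, refs in self.triggers.items():
            if rule in refs:
                self._run(name)

    def shown(self, name: str) -> object:
        return self.outputs.get(name, 0)  # assumed scorecard value when nothing is stored

    def restart(self) -> None:
        self.outputs.clear()              # outputs are not preserved across restarts


children = 4  # current NUMCHILDREN of TestInstance in the article
engine = ToyEngine()
engine.add_formula("formula_without_trigger", lambda: children, set())
engine.add_formula("formula_with_trigger", lambda: children, {"numr_heartbeat_randnum"})
engine.restart()
engine.publish("numr_heartbeat_randnum", 71648)  # next heartbeat value
print(engine.shown("formula_without_trigger"))   # 0
print(engine.shown("formula_with_trigger"))      # 4
```

This illustrates the recommendation above: a cheap heartbeat rule whose value keeps changing gives an otherwise idle formula a regular chance to be recomputed.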

This exercise ends my material for tonight. I’ll continue in another article on triggering status propagation rules and numerical aggregation rules. See you soon!

mp